RoboChallenge's Top-Ranked Embodied AI Model Goes Open Source, Challenging Clean Data Collection Paradigm

PR Newswire

BEIJING, Jan. 12, 2026

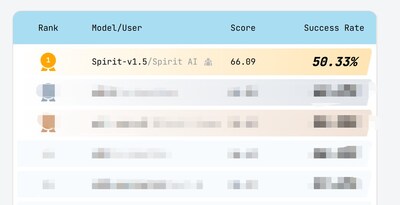

BEIJING, Jan. 12, 2026 /PRNewswire/ -- Spirit AI, an embodied AI startup, today announced that its latest VLA model, Spirit v1.5, has ranked first overall on the RoboChallenge benchmark. To promote industry transparency and collaboration, Spirit AI is open-sourcing its foundation model, including the model weights and core evaluation code used for the benchmark. The release enables the global research community to independently verify the benchmark results and further explore the potential of Spirit v1.5 in advancing embodied intelligence.

RoboChallenge Leaderboard: https://robochallenge.cn/home

Open Source:

Code: https://github.com/Spirit-AI-Team/spirit-v1.5

Model: https://huggingface.co/Spirit-AI-robotics/Spirit-v1.5

Blog: https://www.spirit-ai.com/en/blog/spirit-v1-5

Spirit v1.5 was evaluated on RoboChallenge Table30. RoboChallenge is a standardized real-robot evaluation benchmark jointly initiated by organizations including Dexmal and Hugging Face, with the goal of assessing embodied AI systems under realistic execution conditions.

The tasks span everyday skills such as object insertion, food preparation, and multi-step tool use, and are evaluated across multiple robotic configurations, including single-arm and dual-arm systems with varying perception setups. The benchmark is designed to stress a model's ability in 3D localization, occlusion handling, temporal reasoning, long-horizon execution, and cross-robot generalization.

A Unified Vision-Language-Action Model for Real-World Execution

Spirit v1.5 is built on a unified Vision-Language-Action (VLA) architecture that integrates visual perception, language understanding, and action generation into a single end-to-end decision process. Unlike modular pipelines that separate perception, planning, and control, this unified approach reduces information loss and enables more consistent behavior across complex, multi-stage tasks.

A key technical focus of Spirit v1.5 is its data collection paradigm. Rather than relying on highly curated, scripted demonstrations, Spirit v1.5 is trained largely on diverse, open-ended, goal-driven data, in which operators pursue high-level objectives without predefined action scripts. This paradigm allows the training data to capture a continuous flow of skills, including task transitions, recovery behaviors, and interactions across varied objects and environments.

By learning from this unstructured and diverse experience, the model develops more transferable and generalizable policies, which later translate into stable performance on complex, multi-stage robotic tasks evaluated in real-world benchmarks.

Training on Diverse, Unscripted Real-World Data

In this data collection paradigm, operators are given high-level goals rather than scripted action sequences, allowing tasks to unfold naturally. As a result, a single data session may contain a continuous stream of diverse atomic skills (such as grasping, inserting, twisting, opening containers, and coordinated bimanual actions) that closely resembles activity in real human environments.

This diversity enables the model to learn not isolated behaviors, but how skills connect and transition, forming a more general and transferable policy.
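The idea that one open-ended session contains skill-to-skill transitions can be made concrete with a small sketch. The `Session` schema, the skill labels, and the toy sessions below are all hypothetical illustrations; they are not Spirit AI's data format and are not drawn from its dataset.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class Session:
    """One continuous teleoperation session (hypothetical schema)."""
    goal: str          # high-level objective given to the operator
    skills: List[str]  # atomic skills in the order they occurred


def skill_transitions(session: Session) -> Counter:
    """Count adjacent (previous_skill, next_skill) pairs where the skill changes."""
    pairs = zip(session.skills, session.skills[1:])
    return Counter((a, b) for a, b in pairs if a != b)


# Toy data for illustration only; not taken from the Spirit v1.5 dataset.
scripted = Session(goal="insert peg", skills=["grasp", "insert"])
open_ended = Session(
    goal="tidy the kitchen counter",
    skills=["grasp", "open_container", "grasp", "insert", "twist", "bimanual_lift"],
)

print(len(skill_transitions(scripted)))    # distinct transitions in the scripted episode
print(len(skill_transitions(open_ended)))  # distinct transitions in the open-ended session
```

In this toy example the open-ended session exposes more distinct transitions than the scripted episode, which is the property the paragraph above attributes to unscripted collection.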

Improved Generalization and Transfer Efficiency

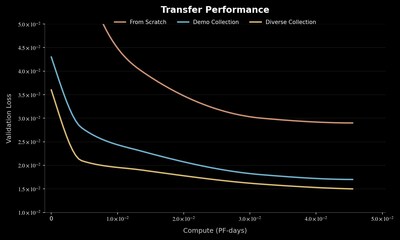

Results from recent ablation studies reveal a notable correlation between the variety of pre-training data and transfer efficiency. Models exposed to diverse, unscripted data during pre-training required significantly less time to master novel tasks during fine-tuning than counterparts pre-trained on scripted demonstrations. This efficiency gain was observed with identical data budgets across both cohorts.

These results suggest that task diversity, rather than task purity, is a critical driver for scalable embodied AI. As the volume of diverse experience increases, Spirit v1.5 continues to show improved performance on new tasks, supporting its role as a general-purpose embodied foundation model.
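One way such a transfer-efficiency comparison could be quantified is by counting how many fine-tuning checkpoints a model needs before it reaches a target success rate. The sketch below assumes that metric; the function name `steps_to_threshold`, the 0.8 threshold, and both curves are hypothetical and synthetic, not results reported by Spirit AI.

```python
from typing import Optional, Sequence


def steps_to_threshold(success_rates: Sequence[float], threshold: float) -> Optional[int]:
    """Return the 1-based index of the first checkpoint reaching the threshold, else None."""
    for step, rate in enumerate(success_rates, start=1):
        if rate >= threshold:
            return step
    return None


# Synthetic toy curves: one success rate per fine-tuning checkpoint.
# These numbers are made up for illustration and are NOT benchmark results.
diverse_pretrain = [0.20, 0.45, 0.70, 0.82, 0.88]
scripted_pretrain = [0.10, 0.25, 0.40, 0.60, 0.81]

print(steps_to_threshold(diverse_pretrain, 0.8))
print(steps_to_threshold(scripted_pretrain, 0.8))
```

With both curves produced under the same fine-tuning data budget, a lower checkpoint count would indicate faster transfer to the new task.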

Open-Source Release and Reproducibility

In a move toward industry transparency, Spirit AI has released the model weights and source code used for the RoboChallenge evaluation, allowing the research community to independently verify the benchmark results. The release also gives developers a foundation on which to extend Spirit v1.5, potentially accelerating advances in embodied intelligence and robotics research.

About Spirit AI

Website: https://www.spirit-ai.com/en/

Spirit AI is a leading frontier startup dedicated to building the "universal brain" for embodied AI. The company focuses on developing advanced embodied large models to create general-purpose robotic companions for every household. By bridging cutting-edge AI with physical interaction, Spirit AI is driving the global transition toward the era of intelligent robotics.

View original content to download multimedia: https://www.prnewswire.com/news-releases/robochallenges-top-ranked-embodied-ai-model-goes-open-source-challenging-clean-data-collection-paradigm-302658247.html

SOURCE Spirit AI